import matplotlib.pyplot as plt

%matplotlib inline

import matplotlib_inline

matplotlib_inline.backend_inline.set_matplotlib_formats('svg')

import seaborn as sns

sns.set_context("paper")

sns.set_style("ticks")

import jax

from jax import grad, jit, vmap, jacfwd, jacrev, value_and_grad, jvp

import jax.numpy as jnp

import jax.random as jr

jax.config.update("jax_enable_x64", True)

colors = sns.color_palette()

key = jr.PRNGKey(0)

Example - Brownian Motion#

To be clear on terms and provide some background, Brownian motion was originally observed as a physical phenomenon, particle of pollen suspended in water (Einstein explains the phenomena in one of his annus mirabilis papers). The mathematical model of Brownian motion was developed by Norbert Wiener in the 1920s. Wiener was an extremely import mathematician who made significant contributions to many areas of mathematics, including stochastic processes, cybernetics, and control theory, you can read his autobiography here.

For our purposes, we will be using the terms Brownian motion and Wiener process interchangeably.

The Wiener process is a continuous-time stocahstic process that plays an important role in many parts of mathematics and physics. It is the continuous time version of a discrete random walk. Let’s go ahead and construct it as the limit of a random walk. The random walk is defined as:

where \(X_i\) are independent and identically distributed random variables with mean 0 and variance of 1 in this example. Let’s then the define the driffusively rescaled random walk as:

where \(t \in [0,1]\). The Wiener process is then defined as the limit of the driffusively rescaled random walk as \(n \to \infty\). We can simulate the Wiener process by simulating the random walk and then taking the limit, this is called the Donsker construction. Let’s go ahead and do it.

def random_walk(key, n):

"""

Generate a random walk sequence S_n based on i.i.d. random variables.

Args:

key: PRNG key for reproducibility.

n: Length of the random walk.

Returns:

Random walk sequence S_n.

"""

# Generate n i.i.d. random variables with mean 0 and variance 1

x = jr.normal(key, shape=(n,))

# Compute partial sums

S_n = jnp.cumsum(x)

return S_n

def limit_random_walk(key, n, t_points):

"""

Generate a Brownian motion (diffusively rescaled random walk).

Args:

key: PRNG key for reproducibility.

n: Number of steps in the random walk.

t_points: Array of time points in [0, 1] at which to evaluate the process.

Returns:

Array of Brownian motion values at specified t_points.

"""

# Generate random walk sequence

S_n = random_walk(key, n)

# Map t_points to indices ⌊nt⌋

indices = jnp.floor(n * t_points).astype(int)

# Rescale by √n to get diffusively rescaled random walk

W_n_t = S_n[indices] / jnp.sqrt(n)

return W_n_t

# Number of steps in random walk

n_steps = 10000

t_points = jnp.linspace(0, 1, n_steps)

# Generate Brownian motion

bm_values = limit_random_walk(key, n_steps, t_points)

# Visualize

fig, ax = plt.subplots()

ax.plot(t_points, bm_values, label="Brownian motion")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian motion")

ax.legend()

sns.despine(trim=True)

plt.show()

Now as n goes to infinity, this discrete random walk converges to the Wiener process. Try playing with the number of steps to see it converge.

Now for actually simulation the Wiener process we can use the following algorithm:

where \(Z\) is a standard normal random variable and \(\Delta t\) is the time step. Let’s go ahead and simulate the Wiener process.

# define the Brownian motion without the random walk function

def brownian_motion_1d(key, n_steps):

dt = 1.0 / n_steps

dW = jnp.sqrt(dt) * jr.normal(key, (n_steps,))

W = jnp.cumsum(dW)

return W

# Number of steps in random walk

n_steps = 10000

t_points = jnp.linspace(0, 1, n_steps)

# Generate Brownian motion

bm_values = brownian_motion_1d(key, n_steps)

# Visualize

fig, ax = plt.subplots()

ax.plot(t_points, bm_values, label="Brownian motion")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian motion")

ax.legend()

sns.despine(trim=True)

plt.show()

Notice it is the same as the limit of the driffusively rescaled random walk (if you use the same key and number of steps).

Okay, you can see how it is random, let try simulating it a few times to see how it behaves.

n_samples = 5

# Generate multiple Brownian motion samples

bm_samples = vmap(lambda key: brownian_motion_1d(key, n_steps))(jr.split(key, n_samples))

# Visualize

fig, ax = plt.subplots()

for i in range(n_samples):

ax.plot(t_points, bm_samples[i], color=colors[i], label=f"Sample {i+1}")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian motion samples")

ax.legend()

sns.despine(trim=True)

plt.show()

So starting from the same spot, the Wiener process will take different paths each time. Some basic properties of the Winer process:

\begin{align*} \mathbb{E}[W(t)] &= 0 \ \mathbb{V}[W(t)] &= t \end{align*}

Let’s go ahead and check that.

# Sample many Brownian motion paths and compute the mean and variance at each time point

n_samples = 1000

bm_samples = vmap(lambda key:

brownian_motion_1d(key, n_steps))(jr.split(key, n_samples))

mean_values = jnp.mean(bm_samples, axis=0)

var_values = jnp.var(bm_samples, axis=0)

# Visualize

fig, ax = plt.subplots()

ax.plot(t_points, mean_values, label="Mean")

ax.fill_between(t_points, mean_values - jnp.sqrt(var_values), mean_values + jnp.sqrt(var_values), alpha=0.3, label="±1 SD")

# Plot a few samples

for i in range(3):

ax.plot(t_points, bm_samples[i], color=colors[0], alpha=0.3, label=f"Sample {i+1}")

ax.hlines(1, 0, 1, color="black", linestyle="--", label="+1 std")

ax.hlines(-1, 0, 1, color="black", linestyle="--", label="-1 std")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian motion sample statistics")

ax.legend()

sns.despine(trim=True)

plt.show()

Let’s plot the statstics as a function of time.

# Plot the mean and variance a function of time

fig, ax = plt.subplots()

ax.plot(t_points, mean_values, label="Mean")

ax.hlines(0, 0, 1, color=colors[0], linestyle="--", label="True mean")

ax.plot(t_points, var_values, label="Variance")

ax.plot(t_points, t_points, color=colors[1], linestyle="--", label="True variance")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian motion sample statistics")

ax.legend(loc="center left")

sns.despine(trim=True)

plt.show()

Notice that the expectation is indeed zero and the variance increases linearly with time.

Brownian Motion in 2D#

Let’s take a look at a Brownian motion in 2D. We can simulate it by simulating two independent Wiener processes and plotting them against each other.

def brownian_motion_2d(key, n):

"""Generates a 2d Brownian motion with n steps."""

key, subkey = jr.split(key)

# Generate two independent 1D Brownian motions

W_n_t = jnp.stack([brownian_motion_1d(key, n),

brownian_motion_1d(subkey, n)], axis=1)

return W_n_t

n_steps = 1000

# Generate 2D Brownian motion

bm_2d_values = brownian_motion_2d(key, n_steps)

# Visualize

fig, ax = plt.subplots()

ax.plot(bm_2d_values[:, 0], bm_2d_values[:, 1])

ax.set_xlabel("Dimension 1")

ax.set_ylabel("Dimension 2")

ax.set_title("2D Brownian motion")

sns.despine(trim=True)

plt.show()

This would’ve been similar to the Brownian motion of particles phenomena being observed. Of course you can continue this to 3D and higher dimensions.

Brownian Bridge#

Let’s now look at the Brownian bridge. It is a Wiener process that starts at 0 and ends at 0. We can define it as:

where \(T\) is the final time. Let’s go ahead and simulate it.

def brownian_bridge_1d(key, n, t_points):

"""

Generate a Brownian bridge (conditioned Brownian motion to return to 0 at T).

Args:

key: PRNG key for reproducibility.

n: Number of steps in the Brownian motion.

t_points: Array of time points in [0, 1] at which to evaluate the process.

Returns:

Array of Brownian bridge values at specified t_points.

"""

# Generate Brownian motion using the earlier function

W_t = brownian_motion_1d(key, n)

# Brownian Bridge transformation: B(t) = W(t) - t * W(T)

# Define the end time

T = -1

W_T = W_t[T] # Value of Brownian motion at T

B_t = W_t - t_points * W_T # Apply the transformation

return B_t

n_steps = 1000

t_points = jnp.linspace(0, 1, n_steps)

# Generate Brownian bridge

bb_values = brownian_bridge_1d(key, n_steps, t_points)

t_points = jnp.linspace(0, 1, n_steps)

# Visualize

fig, ax = plt.subplots()

ax.plot(t_points, bb_values, label="Brownian bridge")

ax.plot(0, 0, marker="*", markersize=10, color="black", label="Boundaries = 0")

ax.plot(1, 0, marker="*", markersize=10, color="black")

ax.hlines(0, 0, 1, color="black", alpha=0.3, linestyle="--")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian bridge")

ax.legend()

sns.despine(trim=True)

plt.show()

If we sample it many times, we will see that the variance decreases as we get closer to the end point.

n_samples = 1000

# Generate multiple Brownian bridge samples

bb_samples = vmap(lambda key:

brownian_bridge_1d(key, n_steps, t_points))(jr.split(key, n_samples))

bb_mean = jnp.mean(bb_samples, axis=0)

bb_var = jnp.var(bb_samples, axis=0)

true_mean = jnp.zeros_like(t_points)

true_var = t_points * (1 - t_points)

# Visualize

fig, ax = plt.subplots()

for i in range(3):

ax.plot(t_points, bb_samples[i], color=colors[0], alpha=0.5, label=f"Sample {i+1}")

ax.fill_between(t_points, -jnp.sqrt(bb_var), jnp.sqrt(bb_var), alpha=0.3, label="±1 SD")

# Plot big stars at the boundaries

ax.plot(0, 0, marker="*", markersize=10, color="black", label="Boundaries = 0")

ax.plot(1, 0, marker="*", markersize=10, color="black")

ax.hlines(0, 0, 1, color="black", alpha=0.3, linestyle="--", label="True mean")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian bridge samples")

ax.legend()

sns.despine(trim=True)

plt.show()

fig, ax = plt.subplots()

ax.plot(t_points, bb_mean, label="Mean")

ax.plot(t_points, true_mean, color=colors[0], linestyle="--", label="True mean")

ax.plot(t_points, bb_var, label="Variance")

ax.plot(t_points, true_var, color=colors[1], linestyle="--", label="True variance")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian bridge sample statistics")

ax.legend()

sns.despine(trim=True)

plt.show()

How many samples do you need to take to be in agreement with the true mean and variance?

This Brownian bridge can be represented as its Karhunen-Loeve expansion. The Karhunen-Loeve expansion is a representation of a stochastic process as an infinite sum of orthogonal functions. Fun fact: these are the eigenvalues and eigenfunctions of the Poisson equation with Dirichlet boundary conditions. The Karhunen-Loeve expansion of the Brownian bridge is given by:

where \(Z_n\) are independent standard normal random variables. Let’s go ahead and simulate the Brownian bridge.

def brownian_bridge_kl(key, t_points, num_terms=100):

"""

Generate a Brownian Bridge using the Karhunen–Loève (KL) expansion.

Args:

key: PRNG key for reproducibility.

t_points: Array of time points in [0, 1] at which to evaluate the process.

num_terms: Number of terms in the KL expansion.

Returns:

Array of Brownian Bridge values at specified t_points.

"""

t_points = jnp.asarray(t_points)

n = jnp.arange(1, num_terms + 1) # KL expansion terms (1, 2, ..., num_terms)

# Generate independent Gaussian random variables ξ_n ~ N(0, 1)

keys = jr.split(key, num_terms)

xi_n = jnp.array([jr.normal(k) for k in keys])

# Compute the Brownian Bridge using the KL expansion

terms = [

xi_n[i] * jnp.sqrt(2) * jnp.sin(n[i] * jnp.pi * t_points) / (jnp.pi * n[i])

for i in range(num_terms)

]

B_t = jnp.sum(jnp.stack(terms, axis=0), axis=0)

return B_t

n_terms = 100

# Generate Brownian bridge using KL expansion

bb_kl_values = brownian_bridge_kl(key, t_points, num_terms=n_terms)

# Visualize

fig, ax = plt.subplots()

ax.plot(t_points, bb_kl_values, label="Brownian bridge (KL expansion)")

# Plot big stars at the boundaries

ax.plot(0, 0, marker="*", markersize=10, color="black", label="Boundaries = 0")

ax.plot(1, 0, marker="*", markersize=10, color="black")

ax.hlines(0, 0, 1, color="black", alpha=0.3, linestyle="--")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian bridge (KL expansion)")

sns.despine(trim=True)

ax.legend()

plt.show()

Okay, that doesn’t look quite like we want, try playing around with the number of terms to see how many it takes to look like a Brownian bridge.

Let’s see if we can recover the variance of the Brownian bridge with the expansion.

n_samples = 1000

n_terms = 1000

# Generate multiple Brownian bridge samples using KL expansion

bb_kl_samples = vmap(lambda key:

brownian_bridge_kl(key, t_points, num_terms=n_terms))(jr.split(key, n_samples))

bb_kl_mean = jnp.mean(bb_kl_samples, axis=0)

bb_kl_var = jnp.var(bb_kl_samples, axis=0)

kl_true_mean = jnp.zeros_like(t_points)

kl_true_var = t_points * (1 - t_points)

# Visualize

fig, ax = plt.subplots()

for i in range(3):

ax.plot(t_points, bb_kl_samples[i], color=colors[0], alpha=0.5, label=f"Sample {i+1}")

ax.fill_between(t_points, -jnp.sqrt(bb_kl_var), jnp.sqrt(bb_kl_var), alpha=0.3, label="±1 SD")

# Plot big stars at the boundaries

ax.plot(0, 0, marker="*", markersize=10, color="black", label="Boundaries = 0")

ax.plot(1, 0, marker="*", markersize=10, color="black")

ax.hlines(0, 0, 1, color="black", alpha=0.3, linestyle="--", label="True mean")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian bridge samples (KL expansion)")

ax.legend()

sns.despine(trim=True)

plt.show()

# Plot the mean and variance a function of time

fig, ax = plt.subplots()

ax.plot(t_points, bb_kl_mean, label="Mean")

ax.plot(t_points, kl_true_mean, color=colors[0], linestyle="--", label="True mean")

ax.plot(t_points, bb_kl_var, label="Variance")

ax.plot(t_points, kl_true_var, color=colors[1], linestyle="--", label="True variance")

ax.set_xlabel("Time")

ax.set_ylabel("Value")

ax.set_title("1D Brownian bridge sample statistics (KL expansion)")

ax.legend()

sns.despine(trim=True)

plt.show()

How many terms and samples do you need to make the KL expansion agree with the true mean and variance?

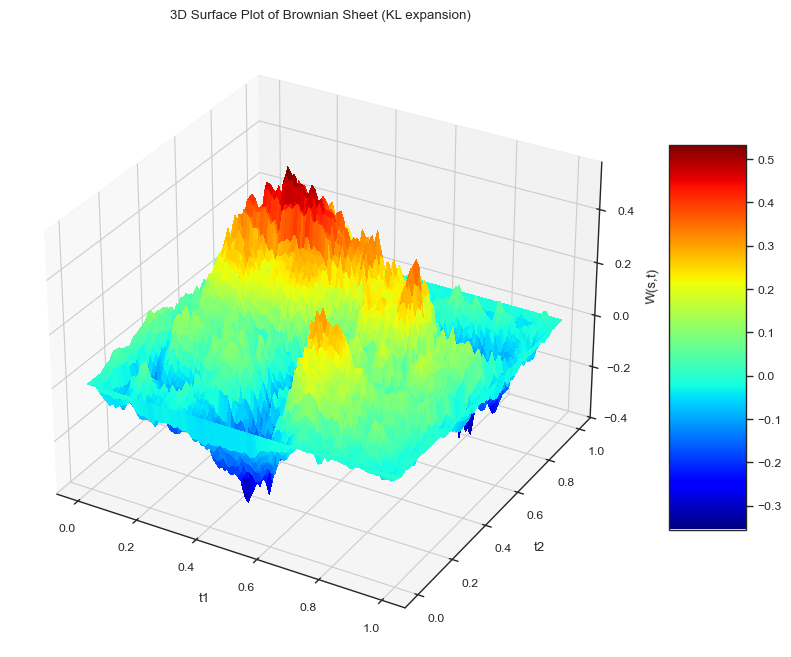

Brownian Sheet (KL Expansion)#

Next we will look at what is called a Brownian sheet, which is basically a 2D Brownian bridge. We can represent it as a Karhunen-Loeve expansion:

where \(Z_{nm}\) are independent standard normal random variables. Let’s go ahead and simulate the Brownian sheet.

def brownian_sheet_kl(key, t1, t2, num_terms=20):

"""

Generate a Brownian sheet using the Karhunen-Loève (KL) expansion.

Args:

key: PRNG key for reproducibility.

t1: 1D array of s-values in [0, 1].

t2: 1D array of t-values in [0, 1].

num_terms: Number of terms M=N in the KL expansion.

Returns:

A 2D array of shape (len(t1), len(t2)) representing the Brownian sheet.

"""

t1 = jnp.asarray(t1)

t2 = jnp.asarray(t2)

m = jnp.arange(1, num_terms + 1)

n = jnp.arange(1, num_terms + 1)

# Generate independent Gaussian random variables Z_{m,n} ~ N(0,1)

keys = jr.split(key, num_terms**2)

xi_mn = jnp.array([jr.normal(k) for k in keys]).reshape(num_terms, num_terms)

# Compute coefficients: 4/(pi^2 * m * n)

coef = 2.0 / (jnp.pi**2 * (m[:, None] * n[None, :]))

# Compute sine terms for s and t

sin_t1 = jnp.sin(jnp.pi * t1[:, None] * m[None, :]) # (S, M)

sin_t2 = jnp.sin(jnp.pi * t2[:, None] * n[None, :]) # (T, N)

# Combine random coefficients and scaling

XiC = xi_mn * coef # (M,N)

# Construct W(s,t) by summing over m,n:

# W = sin_s * XiC * sin_t^T in a matrix-multiplication sense

W_st = sin_t1 @ XiC @ sin_t2.T

return W_st

t1_points = jnp.linspace(0, 1, 100)

t2_points = jnp.linspace(0, 1, 100)

# Generate Brownian sheet using KL expansion

W_values = brownian_sheet_kl(key, t1_points, t2_points, num_terms=100)

# Create a contour plot of the Brownian sheet

T1, T2 = jnp.meshgrid(t1_points, t2_points, indexing='ij')

# 3D plot

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(111, projection='3d')

surf = ax.plot_surface(T1, T2, W_values, cmap='jet', rstride=1, cstride=1,

linewidth=0, antialiased=False)

# Add a color bar which maps values to colors.

fig.colorbar(surf, shrink=0.5, aspect=5)

ax.set_xlabel("t1")

ax.set_ylabel("t2")

ax.set_zlabel("W(s,t)")

ax.set_title("3D Surface Plot of Brownian Sheet (KL expansion)")

plt.show()

Note how the edges of the sheet are all pinned to zero, similar to the Brownian bridge. Also just from the expansion we can see that the sine functions will make the sheet zero at the edges.

If you are interested in learning more about how this relates to probability theory, you can read more about it in part 1 of Addler’s book

Questions#

Can you think of other phenomena that can be modeled as a Wiener process?

In the next notebook we will take a look at how we can use the Wiener process to model stochastic systems.