Tutorial¶

Before moving forward, make sure you understand:

- What is probability? That’s a big question... If you feel like it, I suggest you skim through E. T. Jaynes‘s book Probability Theory: The Logic of Science.

- What is MCMC?

- Read the tutorial of PyMC. To the very least, you need to be able to construct probabilistic models using this package. For advanced applications, you need to be able to construct your own MCMC step methods.

- Of course, I have to assume some familiarity with the so called Sequential Monte Carlo (SMC) or Particle Methods. Many resources can be found online at Arnaud Doucet’s collection or in his book Sequential Monte Carlo Methods in Practice. What exactly pysmc does is documented in Mathematical Details.

What is Sequential Monte Carlo?¶

Sequential Monte Carlo (SMC) is a very efficient and effective way to sample from complicated probability distributions known up to a normalizing constant. The most important complicating factor is multi-modality. That is, probability distributions that do not look at all like Gaussians.

The complete details can be found in Mathematical Details. However, let us give some

insights on what is really going on. Assume that we want so sample

from a probability distribution  , known up to a normalizing

constant:

, known up to a normalizing

constant:

Normally, we construct a variant of MCMC to sample from this

distribution. If  is multi-modal, it is certain that the Markov

Chain will get attracted to one of the modes. Theoretically, if the modes are

connected, it is guaranteed that they will all be visited

as the number of MCMC steps goes to infinity. However, depending on the

probability of the paths that connect the modes, the chain might

never escape during the finite number of MCMC steps that we can actually afford

to perform.

is multi-modal, it is certain that the Markov

Chain will get attracted to one of the modes. Theoretically, if the modes are

connected, it is guaranteed that they will all be visited

as the number of MCMC steps goes to infinity. However, depending on the

probability of the paths that connect the modes, the chain might

never escape during the finite number of MCMC steps that we can actually afford

to perform.

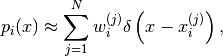

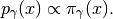

SMC attempts to alleviate this problem. The way it does it is similar to the ideas found in Simulated Annealing. The user defines a family of probability densities:

(1)

such that:

- it is easy to sample from

(either directly or using MCMC),

(either directly or using MCMC), - the probability densities

and

and  are

similar,

are

similar, - the last probability density of the sequence is the target, i.e.,

.

.

There are many ways to define such a sequence. Usually, the exact sequence that needs to be followed is obvious from the definition of the problem. An obvious choice is:

(2)

where  is a non-negative number that makes

is a non-negative number that makes  look

flat (e.g., if

look

flat (e.g., if  has a compact support, you may choose

has a compact support, you may choose

which makes

which makes  the uniform density. For

the general case a choice like

the uniform density. For

the general case a choice like  would still do a good

job) and

would still do a good

job) and  . If

. If  is chosen sufficiently large and

is chosen sufficiently large and

then indeed

then indeed  and

and  will look similar.

will look similar.

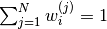

Now we are in a position to discuss what SMC does. We represent each one of the

probability densities  (1) with a particle approximation

(1) with a particle approximation

, where:

, where:

is known as the number of particles,

is known as the number of particles, is known as the weight of particle

is known as the weight of particle  (normalized so that

(normalized so that  ),

), is known as the particle

is known as the particle  .

.

Typically we write:

(3)

but what we really mean is that for any measurable

function of the state space  the following holds:

the following holds:

(4)

almost surely.

So far so good. The only issue here is actually constructing a particle approximation satisfying (4). This is a little bit involved and thus described in Mathematical Details. Here it suffices to say that it more or less goes like this:

- Start with

(i.e., the easy to sample distribution).

(i.e., the easy to sample distribution). - Sample

from

from  either directly (if possible) or

using MCMC and set the weights equal to

either directly (if possible) or

using MCMC and set the weights equal to  . Then

(4) is satisfied for

. Then

(4) is satisfied for  .

. - Compute the weights

and sample -using an appropriate MCMC

kernel- the particles of the next step

and sample -using an appropriate MCMC

kernel- the particles of the next step  so that they

corresponding particle approximation satisfies (4).

so that they

corresponding particle approximation satisfies (4). - Set

.

. - If

stop. Otherwise go to 3.

stop. Otherwise go to 3.

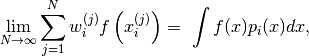

What is implemented in pysmc?¶

pysmc implements something a little bit more complicated than what is described in What is Sequential Monte Carlo?. The full description can be found in Mathematical Details. Basically, we assume that the user has defined a one-parameter family of probability densities:

(5)

The code must be initialized with a particle approximation at a desired value

of  . This can be done either manually by the user or

automatically by pysmc (e.g. by direct sampling or MCMC).

Having constructed an initial particle approximation, the code can be instructed

to move it to another

. This can be done either manually by the user or

automatically by pysmc (e.g. by direct sampling or MCMC).

Having constructed an initial particle approximation, the code can be instructed

to move it to another  . If the two probability densities

. If the two probability densities

and

and  are close, then the code

will jump directly into the construction of the particle approximation at

are close, then the code

will jump directly into the construction of the particle approximation at

. If not, then it will adaptively construct a finite

sequence of

. If not, then it will adaptively construct a finite

sequence of  ‘s connecting

‘s connecting  and

and  and jump from one to the other. Therefore, the user only needs to specify:

and jump from one to the other. Therefore, the user only needs to specify:

- the initial, easy-to-sample-from probability density,

- the target density,

- a one-parametric family of densities that connect the two.

We will see how this can be achieved through a bunch of examples.

A Simple Example¶

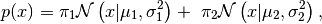

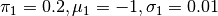

We will start with a probability density with two modes, namely a mixture of two normal densities:

(6)

where  denotes the probability density of a

normal random variable with mean

denotes the probability density of a

normal random variable with mean  and variance

and variance  .

.

is the weight given to the

is the weight given to the  -th normal

(

-th normal

( ) and

) and  are the corresponding

mean and variance. We pick the following parameters:

are the corresponding

mean and variance. We pick the following parameters:

,

, .

.

This probability density is shown in Simple Example PDF Figure. It is obvious that sampling this probability density using MCMC will be very problematic.

Plot of (6) with

and

and

.

.

Defining a family of probability densities for SMC¶

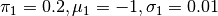

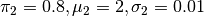

Remember that our goal is to sample (6) using SMC. Towards this goal we need to define a one-parameter family of probability densities (5) starting from a simple one to our target. The simplest choice is probably this:

(7)

Notice that: 1) for  we obtain

we obtain  and 2) for

and 2) for

small (say

small (say  ) we obtain a relatively flat

probability density. See Simple Example Family of PDF’s Figure.

) we obtain a relatively flat

probability density. See Simple Example Family of PDF’s Figure.

Plot of  of (7) for

various

of (7) for

various  ‘s.

‘s.

Defining a PyMC model¶

Since, this is our very first example we will use it as an opportunity to show

how PyMC can be used to define probabilistic models as well as MCMC sampling

algorithms. First of all let us mention that a PyMC model has to be packaged

either in a class or in a module. For the simple example we are considering, we

choose to use the module approach (see

examples/simple_model.py).

The model can be trivially defined using PyMC decorators. All we

have to do is define the logarithm of  . We will call it

mixture. The contents of that module are:

. We will call it

mixture. The contents of that module are:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 | import pymc

import numpy as np

import math

@pymc.stochastic(dtype=float)

def mixture(value=1., gamma=1., pi=[0.2, 0.8], mu=[-1., 2.],

sigma=[0.01, 0.01]):

"""

The log probability of a mixture of normal densities.

:param value: The point of evaluation.

:type value : float

:param gamma: The parameter characterizing the SMC one-parameter

family.

:type gamma : float

:param pi : The weights of the components.

:type pi : 1D :class:`numpy.ndarray`

:param mu : The mean of each component.

:type mu : 1D :class:`numpy.ndarray`

:param sigma: The standard deviation of each component.

:type sigma : 1D :class:`numpy.ndarray`

"""

# Make sure everything is a numpy array

pi = np.array(pi)

mu = np.array(mu)

sigma = np.array(sigma)

# The number of components in the mixture

n = pi.shape[0]

# pymc.normal_like requires the precision not the variance:

tau = np.sqrt(1. / sigma ** 2)

# The following looks a little bit awkward because of the need for

# numerical stability:

p = np.log(pi)

p += np.array([pymc.normal_like(value, mu[i], tau[i])

for i in range(n)])

p = math.fsum(np.exp(p))

# logp should never be negative, but it can be zero...

if p <= 0.:

return -np.inf

return gamma * math.log(p)

|

This might look a little bit complicated but unfortunately one has to take care of round-off errors when sump small numbers... Notice that, we have defined pretty much every part of the mixture as an independent variable. The essential variable that defines the family of (7) is gamma. Well, you don’t actually have to call it gamma, but we will talk about this later...

Let’s import that module and see what we can do with it:

>>> import simple_model as model

>>> print model.mixture.parents

{'mu': [-1.0, 2.0], 'pi': [0.2, 0.8], 'sigma': [0.01, 0.01], 'gamma': 1.0}

The final command shows you all the parents of the stochastic variable mixture. The stochastic variable mixture was assigned a value by default (see line 4 at the code block above). You can see the current value of the stochastic variable at any time by doing:

>>> print model.mixture.value

1.0

If we started a MCMC chain at this point, this would be the initial value of the chain. You can change it to anything you want by simply doing:

>>> model.mixture.value = 0.5

>>> print model.mixture.value

0.5

To see the logarithm of the probability at the current state of the stochastic variable, do:

>>> print model.mixture.logp

-111.11635344

Now, if you want to change, let’s say, gamma to 0.5 all you have to do is:

>>> model.mixture.parents['gamma'] = 0.5

>>> print model.mixture.gamma

0.5

The logarithm of the probability should have changed also:

>>> print model.mixture.logp

-55.5581767201

Attempting to do MCMC¶

Let’s load the model again and attempt to do MCMC using PyMC‘s functionality:

>>> import simple_model as model

>>> import pymc

>>> mcmc_sampler = pymc.MCMC(model)

>>> mcmc_sampler.sample(1000000, thin=1000, burn=1000)

You should see a progress bar measuring the number of samples taken. It should

take about a minute to finish. We are actually doing  MCMC steps,

we burn the first burn = 1000 samples and we are looking at the chain

every thin = 1000 samples (i.e., we are dropping everything in between).

PyMC automatically picks a proposal (see MCMC step methods) for you. For

this particular example it should have picked

pymc.step_methods.Metropolis which corresponds to a simple random walk

proposal. There is no need to tune the parameters of the random walk since

PyMC is supposed to do that for you. In any case, it is possible to find the

right variance for the random walk, but you need to know exactly how far apart

the modes are...

MCMC steps,

we burn the first burn = 1000 samples and we are looking at the chain

every thin = 1000 samples (i.e., we are dropping everything in between).

PyMC automatically picks a proposal (see MCMC step methods) for you. For

this particular example it should have picked

pymc.step_methods.Metropolis which corresponds to a simple random walk

proposal. There is no need to tune the parameters of the random walk since

PyMC is supposed to do that for you. In any case, it is possible to find the

right variance for the random walk, but you need to know exactly how far apart

the modes are...

You may look at the samples we’ve got by doing:

>>> print mcmc_sampler.trace('mixture')[:]

[ 1.9915846 1.93300521 2.09291872 2.05159841 2.06620882 1.88901709

1.89521431 1.9631256 2.0363258 1.9756637 2.04818845 1.85036634

1.98907666 1.82212356 1.97678175 1.99854311 1.92124829 2.02077581

2.08536334 2.16664208 2.08328293 2.05378638 1.89437676 2.09555348

...

Now, let us plot the results:

>>> import matplotlib.pyplot as plt

>>> pymc.plot(mcmc_sampler)

>>> plt.show()

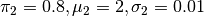

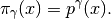

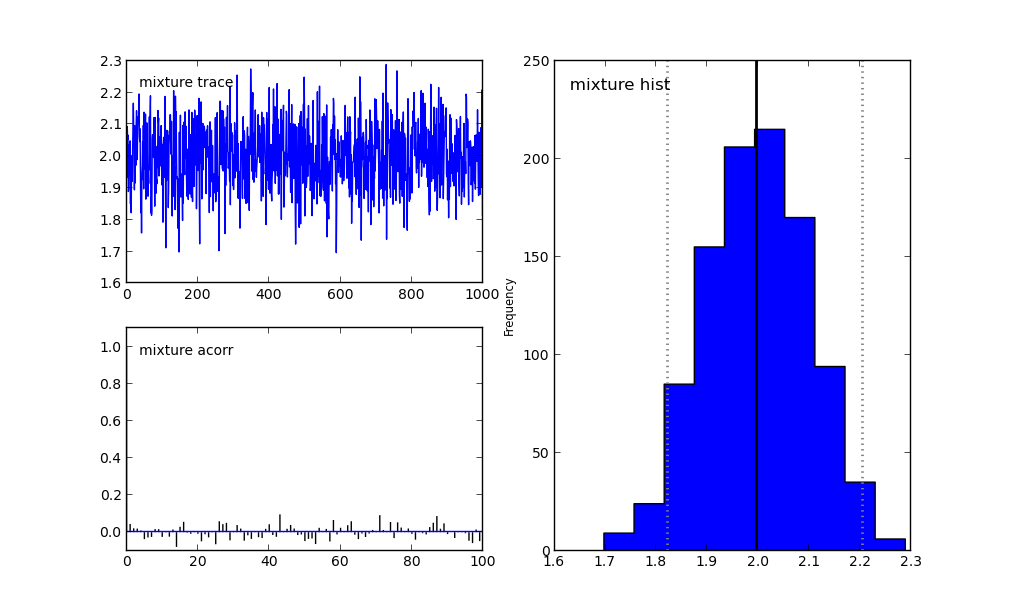

The results are shown in Simple Example MCMC Figure. Unless, you are extremely lucky, you should have missed one of the modes...

MCMC fails to capture one of the modes of (6).

Now, let’s see what it takes to make it work. Basically, what we need to

do is find the right step for the random walk proposal. Looking at

Simple Example Family of PDF’s Figure, it is easy to guess that the

right step is  . So, let’s try this:

. So, let’s try this:

>>> import simple_model as model

>>> import pymc

>>> mcmc_sampler = pymc.MCMC(simple_model)

>>> proposal = pymc.Metropolis(model.mixture, proposal_sd=3.)

>>> mcmc_sampler.step_method_dict[model.mixture][0] = proposal

>>> mcmc_sampler.sample(1000000, thin=1000, burn=0,

tune_throughout=False)

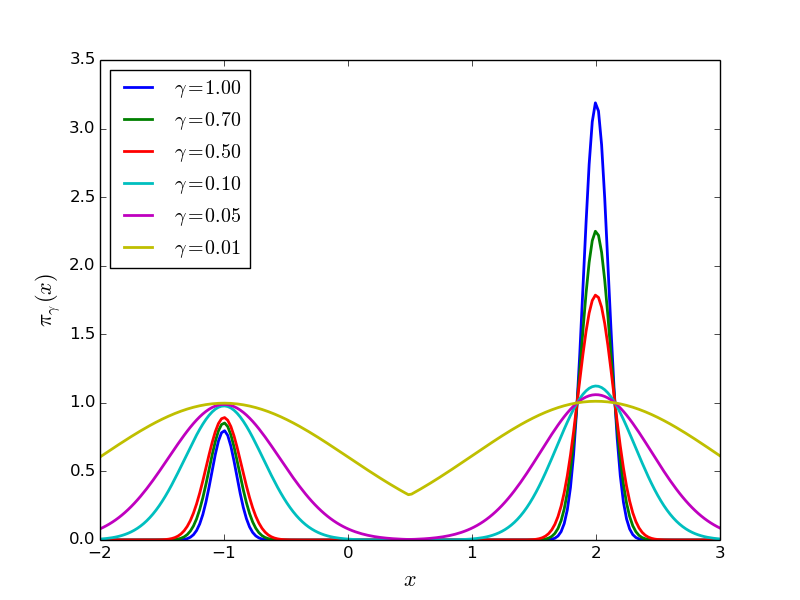

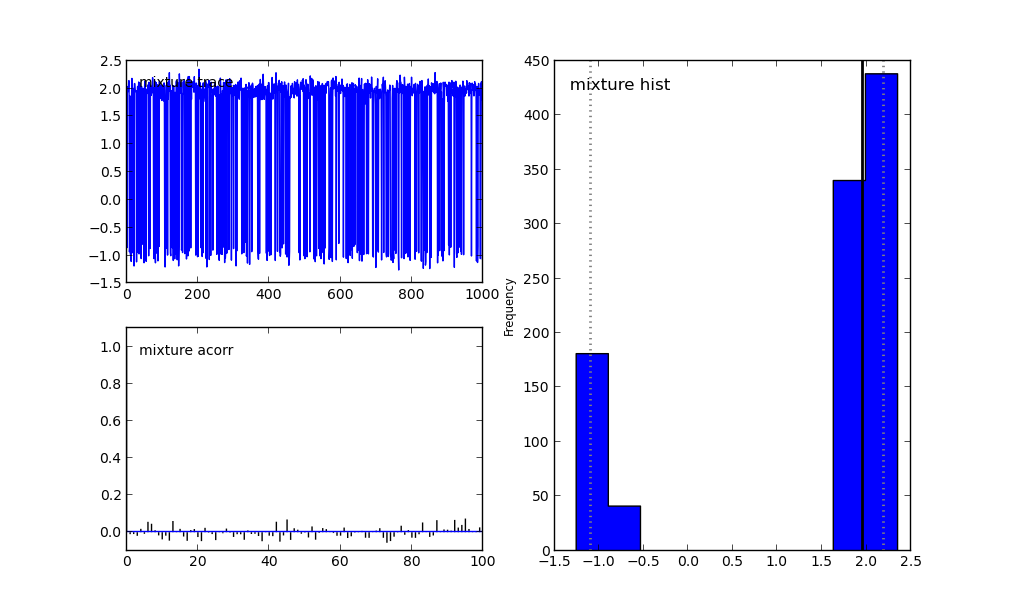

For more details on selecting/tuning step methods see MCMC step methods. In the last line we have asked PyMC not to tune the parameters of the step method. If we didn’t do that, it would be fooled again. The results are shown in Simple Example MCMC Picked Proposal Figure.

Multi-modal distributions can be captured by simple MCMC only if you have significant prior knowledge about them.

You see that the two modes can be captured by plain MCMC if we actually know how far appart they are. Of course, this is completely useless in a real problem. Most of the times, we are not be able to draw the probability density function and see where the modes are. SMC is here to save the day!

Doing SMC¶

To finish this example, let’s just see how SMC behaves. As we mentioned earlier, SMC requires:

- a one-parameter family of probability densities connecting a simple probability density to our target (we created this in simple_example_model), and

- an MCMC sampler (we saw how to create one in Attempting to do MCMC).

Now, let’s put everything together using the functionality of pysmc.SMC:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 | import simple_model as model

import pymc

import pysmc

# Construct the MCMC sampler

mcmc_sampler = pymc.MCMC(model)

# Construct the SMC sampler

smc_sampler = pysmc.SMC(mcmc_sampler, num_particles=1000,

num_mcmc=10, verbose=1)

# Initialize SMC at gamma = 0.01

smc_sampler.initialize(0.01)

# Move the particles to gamma = 1.0

smc_sampler.move_to(1.0)

# Get a particle approximation

p = smc_sampler.get_particle_approximation()

# Plot a histogram

pysmc.hist(p, 'mixture')

plt.show()

|

This code can be found in examples/simple_model_run.py.

In lines 8-9, we initialize the SMC class. Of course, it requires a

mcmc_sampler which is a pymc.MCMC object.

num_particles specifies the number of particles we wish to use and

num_mcmc the number of MCMC steps we are going to perform at each

different value of  . The verbose parameter specifies

the amount of text the algorithm prints to the standard output.

There are many more parameters which are fully documented in

pysmc.SMC. In line 11, we initialize the algorithm at

. The verbose parameter specifies

the amount of text the algorithm prints to the standard output.

There are many more parameters which are fully documented in

pysmc.SMC. In line 11, we initialize the algorithm at

. This essentially performs a number of MCMC

steps at this easy-to-sample-from probability density. It constructs the

initialial particle approximation. See

pysmc.SMC.initialize() the complete list of arguments.

In line 13, we instruct the object to move the particle

approximation to

. This essentially performs a number of MCMC

steps at this easy-to-sample-from probability density. It constructs the

initialial particle approximation. See

pysmc.SMC.initialize() the complete list of arguments.

In line 13, we instruct the object to move the particle

approximation to  , i.e., to the target probability

density of this particular example. To see the weights of the final

particle approximation, we use pysmc.SMC.weights

(e.g., smc_sampler.weights). To get the particles themselves we may

use pysmc.SMC.particles (e.g., smc_sampler.particles) which

returns a dictionary of the particles. However, it is usually most

convenient to access them via pysmc.SMC.get_particle_approximation()

which returns a pysmc.ParticleApproximation object.

, i.e., to the target probability

density of this particular example. To see the weights of the final

particle approximation, we use pysmc.SMC.weights

(e.g., smc_sampler.weights). To get the particles themselves we may

use pysmc.SMC.particles (e.g., smc_sampler.particles) which

returns a dictionary of the particles. However, it is usually most

convenient to access them via pysmc.SMC.get_particle_approximation()

which returns a pysmc.ParticleApproximation object.

The output of the algorithm looks like this:

------------------------

START SMC Initialization

------------------------

- initializing at gamma : 0.01

- initializing by sampling from the prior: FAILURE

- initializing via MCMC

- taking a total of 10000

- creating a particle every 10

[---------------- 43% ] 4392 of 10000 complete in 0.5 sec

[-----------------83%----------- ] 8322 of 10000 complete in 1.0 sec

----------------------

END SMC Initialization

----------------------

-----------------

START SMC MOVE TO

-----------------

initial gamma : 0.01

final gamma : 1.0

ess reduction: 0.9

- moving to gamma : 0.0204271802459

- performing 10 MCMC steps per particle

[------------ 31% ] 3182 of 10000 complete in 0.5 sec

[-----------------62%--- ] 6292 of 10000 complete in 1.0 sec

[-----------------93%--------------- ] 9322 of 10000 complete in 1.5 sec

- moving to gamma : 0.0382316662144

- performing 10 MCMC steps per particle

[---------- 28% ] 2822 of 10000 complete in 0.5 sec

[-----------------55%- ] 5542 of 10000 complete in 1.0 sec

[-----------------81%----------- ] 8162 of 10000 complete in 1.5 sec

- moving to gamma : 0.0677677161458

- performing 10 MCMC steps per particle

[--------- 24% ] 2442 of 10000 complete in 0.5 sec

[-----------------47% ] 4732 of 10000 complete in 1.0 sec

[-----------------70%------ ] 7032 of 10000 complete in 1.5 sec

[-----------------91%-------------- ] 9172 of 10000 complete in 2.0 sec

- moving to gamma : 0.118156872826

- performing 10 MCMC steps per particle

[-------- 21% ] 2122 of 10000 complete in 0.5 sec

[--------------- 42% ] 4202 of 10000 complete in 1.0 sec

[-----------------62%--- ] 6232 of 10000 complete in 1.5 sec

[-----------------81%----------- ] 8172 of 10000 complete in 2.0 sec

- moving to gamma : 0.206496296978

- performing 10 MCMC steps per particle

[----- 15% ] 1502 of 10000 complete in 0.5 sec

[------------ 33% ] 3332 of 10000 complete in 1.0 sec

[-----------------48% ] 4882 of 10000 complete in 1.5 sec

[-----------------66%----- ] 6602 of 10000 complete in 2.0 sec

[-----------------82%----------- ] 8252 of 10000 complete in 2.5 sec

[-----------------96%---------------- ] 9692 of 10000 complete in 3.0 sec

- moving to gamma : 0.326593174468

- performing 10 MCMC steps per particle

[------ 16% ] 1642 of 10000 complete in 0.5 sec

[------------ 32% ] 3252 of 10000 complete in 1.0 sec

[-----------------48% ] 4842 of 10000 complete in 1.5 sec

[-----------------64%---- ] 6412 of 10000 complete in 2.0 sec

[-----------------79%---------- ] 7942 of 10000 complete in 2.5 sec

[-----------------94%--------------- ] 9432 of 10000 complete in 3.0 sec

- moving to gamma : 0.494246909688

- performing 10 MCMC steps per particle

[----- 14% ] 1402 of 10000 complete in 0.5 sec

[---------- 28% ] 2832 of 10000 complete in 1.0 sec

[---------------- 42% ] 4232 of 10000 complete in 1.5 sec

[-----------------56%- ] 5622 of 10000 complete in 2.0 sec

[-----------------69%------ ] 6992 of 10000 complete in 2.5 sec

[-----------------83%----------- ] 8342 of 10000 complete in 3.0 sec

[-----------------96%---------------- ] 9662 of 10000 complete in 3.5 sec

- moving to gamma : 0.680964613537

- performing 10 MCMC steps per particle

[---- 13% ] 1302 of 10000 complete in 0.5 sec

[--------- 25% ] 2552 of 10000 complete in 1.0 sec

[-------------- 38% ] 3832 of 10000 complete in 1.5 sec

[-----------------50% ] 5032 of 10000 complete in 2.0 sec

[-----------------62%--- ] 6272 of 10000 complete in 2.5 sec

[-----------------75%-------- ] 7502 of 10000 complete in 3.0 sec

[-----------------86%------------ ] 8672 of 10000 complete in 3.5 sec

[-----------------98%----------------- ] 9842 of 10000 complete in 4.0 sec

- moving to gamma : 1.0

- performing 10 MCMC steps per particle

[---- 11% ] 1172 of 10000 complete in 0.5 sec

[-------- 23% ] 2302 of 10000 complete in 1.0 sec

[------------ 34% ] 3412 of 10000 complete in 1.5 sec

[-----------------44% ] 4482 of 10000 complete in 2.0 sec

[-----------------55%- ] 5582 of 10000 complete in 2.5 sec

[-----------------67%----- ] 6702 of 10000 complete in 3.0 sec

[-----------------77%--------- ] 7792 of 10000 complete in 3.5 sec

[-----------------88%------------- ] 8892 of 10000 complete in 4.0 sec

[-----------------99%----------------- ] 9972 of 10000 complete in 4.5 sec

---------------

END SMC MOVE TO

---------------

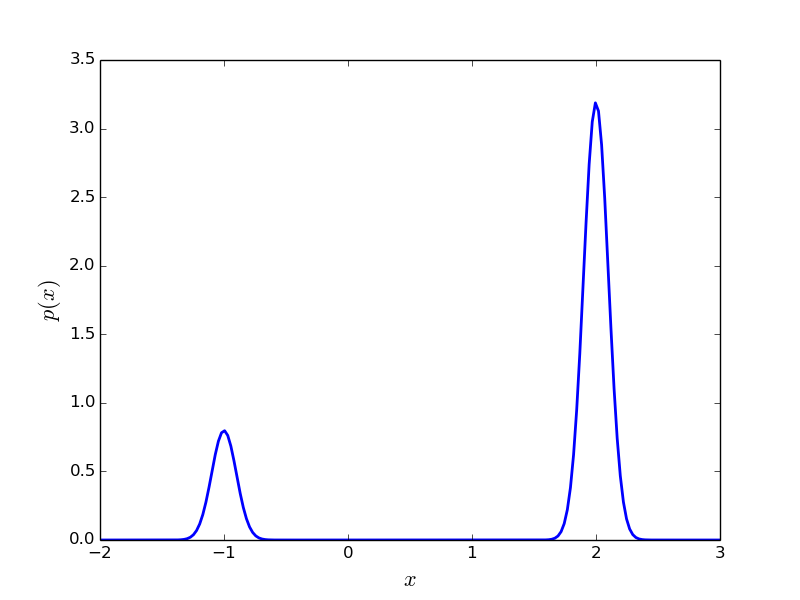

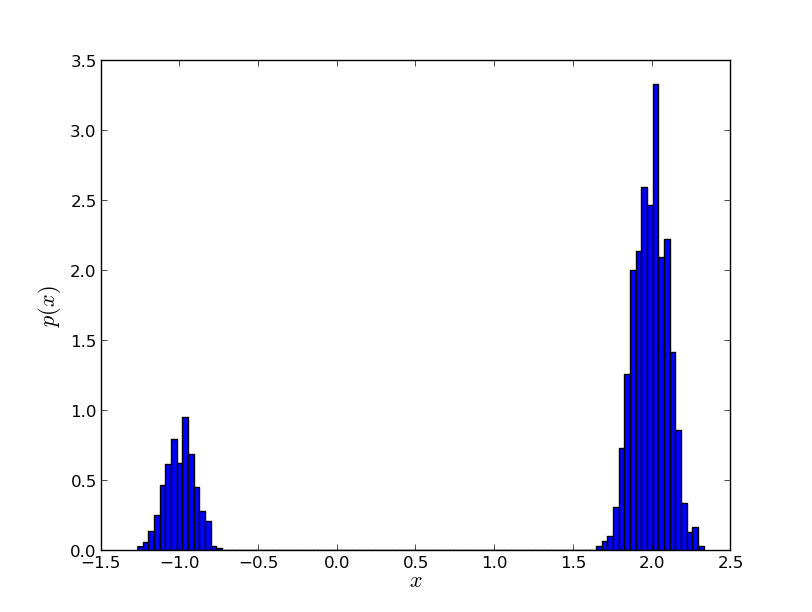

The figure you should see is shown in Simple Example SMC Histogram Figure. You see that SMC has no problem discovering the two modes, even though we have not hand-picked the parameters of the MCMC proposal.

SMC easily discovers both modes of (6)

Initializing SMC with just the model¶

It is also possible to initialize SMC without explicitly specifying a pymc.MCMC. This allows PyMC to select everything for you. It can be done like this:

1 2 3 4 5 6 7 | import simple_model as model

import pysmc

# Construct the SMC sampler

smc_sampler = pysmc.SMC(model, num_particles=1000,

num_mcmc=10, verbose=1)

# Do whatever you want to smc_sampler...

|

Dumping the data to a database¶

At the moment we support only a very simple database (see pysmc.DataBase) which is built on cPickle. There are two options of pysmc.SMC that you need to specify when you want to use the database functionality: db_filename and update_db. See the documentation of pysmc.SMC for their complete details. If you are using a database, you can force the current state of SMC to be written to it by calling pysmc.commit(). If you want to access a database, the all you have to do is use the attribute pysmc.db. Here is a very simple illustration of what you can do with this:

1 2 3 4 5 6 7 8 9 | import simple_model as model

import pysmc

smc_sampler = pysmc.SMC(model, num_particles=1000,

num_mcmc=10, verbose=1,

db_filename='db.pickle',

update_db=True)

smc_sampler.initialize(0.01)

smc_sampler.move(0.5)

|

As you have probably grasped until now, this will construct an initial

particle approximation at  and it will move it to

and it will move it to

. The db_filename option specifies the file on which

the database will write data. Let us assume that the file does not exist.

Then, it will be created. The option update_db specifies that SMC should

commit data to the database everytime it moves to another

. The db_filename option specifies the file on which

the database will write data. Let us assume that the file does not exist.

Then, it will be created. The option update_db specifies that SMC should

commit data to the database everytime it moves to another  .

Now, assume that you quit python. The database is saved in db.pickle.

If you want to continue from the point you had stopped, all you have to do

is:

.

Now, assume that you quit python. The database is saved in db.pickle.

If you want to continue from the point you had stopped, all you have to do

is:

1 2 3 4 5 6 7 8 | import simple_model as model

import pysmc

smc_sampler = pysmc.SMC(model, num_particles=1000,

num_mcmc=10, verbose=1,

db_filename='db.pickle',

update_db=True)

smc_sampler.move(1.)

|

Now, we have just skipped the initialization line. Sine the database already

exists, pysmc.SMC looks at it and initializes the particle

approximation from the last state stored in it. Therefore, you actually

start moving from  to

to  .

.

Making a movie of the particles¶

If you have stored the particle approximations of the various

‘s in a database, then it is possible to make a movie out of

them using pysmc.make_movie_from_db(). Here is how:

‘s in a database, then it is possible to make a movie out of

them using pysmc.make_movie_from_db(). Here is how:

1 2 3 4 5 6 7 8 9 10 11 | import pysmc

import matplotlib.pyplot as plt

# Load the database

db = pysmc.DataBase.load('db.pickle')

# Make the movie

movie = make_movie_from_db(db, 'mixture')

# See the movie

plt.show()

# Save the movie

movie.save('smc_movie.mp4')

|

The movie created in this way can be downloaded from videos/smc_movie.mp4.

Sampling in Parallel¶

With pysmc it is possible to carry out the sampling procedure in

parallel. As is well known, SMC is embarransingly parallelizable. Taking it to

the extreme, its process has to deal with only one particle. Communication is

only necessary when there is a need to resample the particle approximation

and for finding the next  in the sequence of probability

densities. Parallelization in pysmc is achieved with the help of

mpi4py. Here is a simple example:

in the sequence of probability

densities. Parallelization in pysmc is achieved with the help of

mpi4py. Here is a simple example:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 | import pysmc

import simple_model as model

import mpi4py.MPI as mpi

import matplotlib.pyplot as plt

# Construct the SMC sampler

smc_sampler = pysmc.SMC(model, num_particles=1000,

num_mcmc=10, verbose=1,

mpi=mpi, gamma_is_an_exponent=True)

# Initialize SMC at gamma = 0.01

smc_sampler.initialize(0.01)

# Move the particles to gamma = 1.0

smc_sampler.move_to(1.)

# Get a particle approximation

p = smc_sampler.get_particle_approximation()

# Plot a histogram

pysmc.hist(p, 'mixture')

if mpi.COMM_WORLD.Get_rank() == 0:

plt.show()

|

As you can see the only difference is at line 3 where mpi4py is instantiated, line 8 where mpi is passed as an argument to pysmc.SMC and the last two lines where we simply specify that only one process should attempt to plot the histogram.